A web search engine for the iQIYI video site

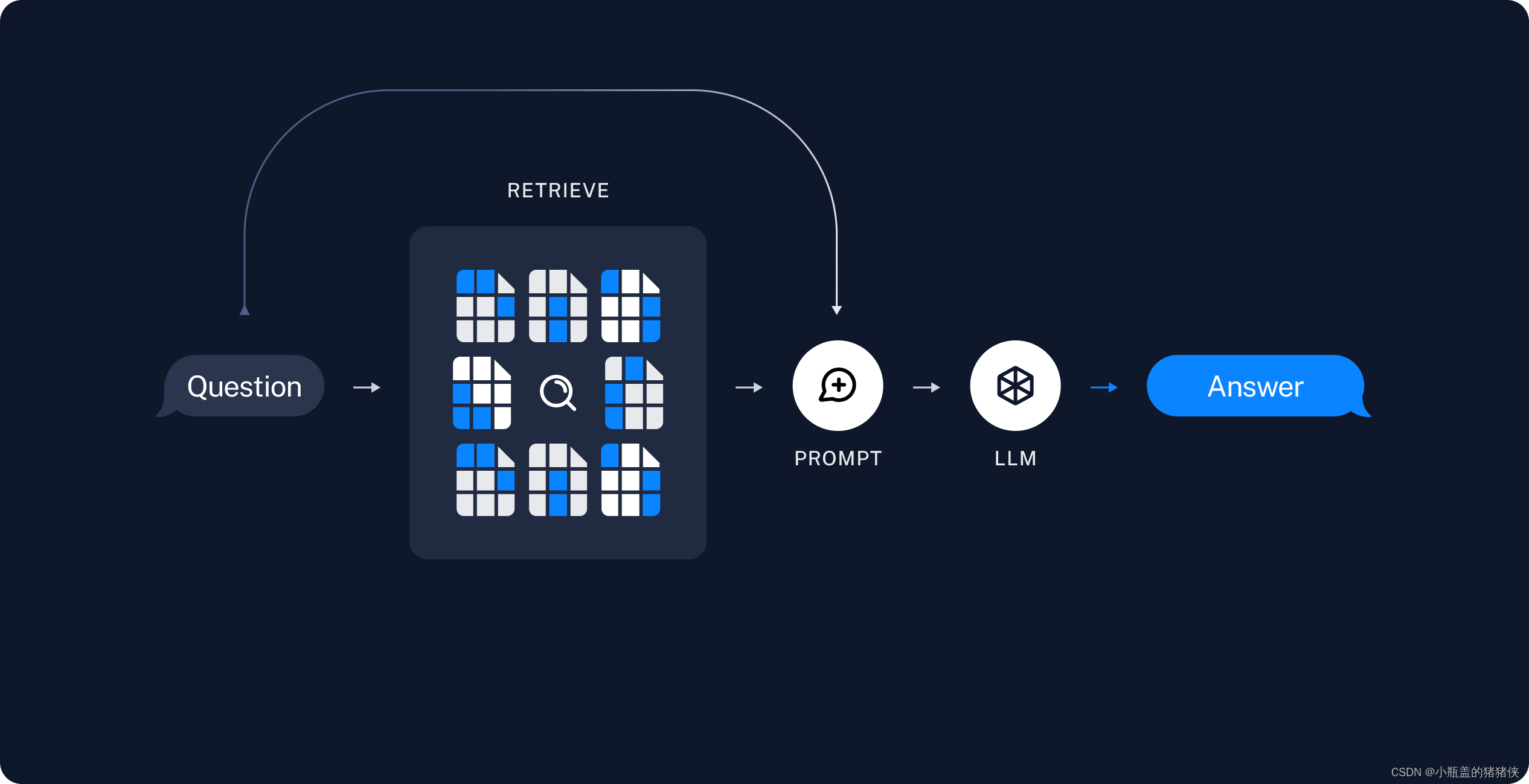

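One of the most powerful applications enabled by LLMs is the sophisticated question-answering (Q&A) chatbot: an application that can answer questions about specific source information. These applications use a technique known as retrieval-augmented generation (RAG).

A typical RAG application has two main components:

- Indexing: a pipeline that ingests data from a source and indexes it. This usually happens offline.

- Retrieval and generation: the actual RAG chain, which takes the user query at run time, retrieves the relevant data from the index, and passes it to the model.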

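The most common full sequence from raw data to answer looks like this:

Indexing

1. Load: first we need to load the data. This is done with DocumentLoaders.

2. Split: text splitters break large Documents into smaller chunks. This helps both when indexing the data and when passing it to a model, because large chunks are harder to search and do not fit in a model's finite context window.

3. Store: we need somewhere to store and index the splits so that they can be searched later. This is usually done with a VectorStore and an Embeddings model.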
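To make the splitting step concrete, here is a minimal sketch of fixed-size chunking with overlap. It is an illustration only: the `split_text` helper is hypothetical, it cuts on raw character offsets, and it does not reproduce how any particular text splitter picks its boundaries.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Hypothetical helper for illustration; it ignores paragraph and
    sentence boundaries.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# 25 characters: the digits 0-9 repeated
text = "".join(str(i % 10) for i in range(25))
chunks = split_text(text, chunk_size=10, chunk_overlap=4)
for chunk in chunks:
    print(chunk)
```

Each chunk repeats the last `chunk_overlap` characters of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk when it is shorter than the overlap.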

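Retrieval and generation

4. Retrieve: given a user input, the relevant splits are retrieved from storage using a Retriever.

5. Generate: a ChatModel / LLM produces an answer using a prompt that includes the question and the retrieved data.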
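The retrieval and generation steps can also be sketched without any libraries. The toy example below assumes a bag-of-words count as the "embedding" and cosine similarity as the ranking score. `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helpers; a real application would use an Embeddings model and a VectorStore instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k stored chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt string."""
    context = "\n\n".join(chunks)
    return (
        "Answer the question using only this context:\n\n"
        f"{context}\n\nQuestion: {question}"
    )


# "Store": embed every chunk once and keep (chunk, vector) pairs
chunks = [
    "Task decomposition breaks a big task into smaller steps.",
    "FAISS is a library for similarity search over vectors.",
    "Chain of thought prompts the model to think step by step.",
]
index = [(c, embed(c)) for c in chunks]

# "Retrieve" and "Generate" (only the prompt is built; no model is called)
question = "What is task decomposition?"
top = retrieve(question, index, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The final string is what would be sent to the model; the generation step itself is left out because it depends on the chosen LLM.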

    # Create the embedding model
    from langchain_community.embeddings import HuggingFaceEmbeddings
    from langchain_community.vectorstores import FAISS

    from config import EMBEDDING_PATH  # local module that stores the embedding model path

    # Initialize the embedding model
    model_kwargs = {"device": "cuda"}
    encode_kwargs = {"batch_size": 64, "normalize_embeddings": True}
    embed_model = HuggingFaceEmbeddings(
        model_name=EMBEDDING_PATH,
        model_kwargs=model_kwargs,
        encode_kwargs=encode_kwargs,
    )

    # Import the remaining libraries
    import bs4
    from langchain import hub
    from langchain_community.document_loaders import WebBaseLoader
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.runnables import RunnablePassthrough
    from langchain_openai import ChatOpenAI
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    chat = ChatOpenAI()

    # Load only the post title, header, and body from the page
    loader = WebBaseLoader(
        web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),
        bs_kwargs=dict(
            parse_only=bs4.SoupStrainer(class_=("post-content", "post-title", "post-header"))
        ),
    )
    docs = loader.load()

    # Split into overlapping chunks, embed them, and index them in FAISS
    documents = RecursiveCharacterTextSplitter(
        chunk_size=1000, chunk_overlap=200
    ).split_documents(docs)
    vectorstore = FAISS.from_documents(documents, embed_model)
    retriever = vectorstore.as_retriever()

    prompt = hub.pull("rlm/rag-prompt")

    def format_docs(docs):
        # Join the text of all retrieved documents into one string
        return "\n\n".join(doc.page_content for doc in docs)

    # Build the chain
    chain = (
        {"context": retriever | format_docs, "question": RunnablePassthrough()}
        | prompt
        | chat
        | StrOutputParser()
    )

    chain.invoke("What is Task Decomposition?")

Output:

    'Task decomposition is the process of breaking down a problem into multiple thought steps to create a tree structure. It can be achieved through LLM with simple prompting, task-specific instructions, or human inputs. The goal is to transform big tasks into smaller and simpler steps to enhance model performance on complex tasks.'

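First, each of these components (retriever, prompt, chat, and so on) is an instance of Runnable. This means they all implement the same methods, such as the sync and async versions of .invoke, .stream, and .batch, which makes them easier to connect. They can be joined with the | operator into a RunnableSequence, which is itself another Runnable.

When LangChain meets the | operator, it automatically casts certain objects to Runnables. Here, format_docs is cast to a RunnableLambda, and the dict with the keys "context" and "question" is cast to a RunnableParallel. The details matter less than the main point: every object in the chain is a Runnable.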

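Let's trace how the input question flows through the Runnables above.

As we saw, the input to prompt is expected to be a dict with the keys "context" and "question". The first element of the chain therefore builds Runnables that compute both values from the input question:

- retriever | format_docs passes the question to the retriever, which returns Document objects, and then passes those Documents to format_docs to produce a string;

- RunnablePassthrough() passes the input question through unchanged.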
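The casting and data flow described above can be imitated in a few lines of plain Python. This is a toy sketch, not LangChain's implementation: `Step` and `coerce` are hypothetical names, and the retriever and prompt are stand-in functions so the example runs on its own.

```python
class Step:
    """Toy stand-in for a Runnable: one callable behind a uniform interface."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def batch(self, values):
        return [self.invoke(v) for v in values]

    def __or__(self, other):
        # step | other -> a new Step that runs self first, then other
        nxt = coerce(other)
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other):
        # other | step, where `other` is a plain dict or function
        return coerce(other) | self


def coerce(obj):
    """Turn plain objects into Steps, loosely like the casting described above."""
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        # Every branch receives the same input; results are gathered by key.
        branches = {key: coerce(branch) for key, branch in obj.items()}
        return Step(lambda value: {k: b.invoke(value) for k, b in branches.items()})
    if callable(obj):
        return Step(obj)
    raise TypeError(f"cannot coerce {type(obj).__name__} to a Step")


# Stand-ins for the real components (all hypothetical)
retriever = Step(lambda question: [f"doc about {question}", "another doc"])
passthrough = Step(lambda value: value)
prompt = Step(lambda d: f"Context:\n{d['context']}\n\nQuestion: {d['question']}")


def format_docs(docs):
    return "\n\n".join(docs)


# Same shape as the chain above, minus the model and the output parser
chain = {"context": retriever | format_docs, "question": passthrough} | prompt
result = chain.invoke("task decomposition")
print(result)
```

The dict on the left of `|` does not know about `Step`, so Python falls back to `Step.__ror__`, which is where the dict gets wrapped. Both branches receive the same question, and the prompt step receives the resulting dict.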

Built-in chains

    from langchain.chains import create_retrieval_chain
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain_core.prompts import ChatPromptTemplate

    system_prompt = (
        "You are an assistant for question-answering tasks. "
        "Use the following pieces of retrieved context to answer "
        "the question. If you don't know the answer, say that you "
        "don't know. Use three sentences maximum and keep the "
        "answer concise."
        "\n\n"
        "{context}"
    )

    prompt = ChatPromptTemplate.from_messages(
        [
            ("system", system_prompt),
            ("human", "{input}"),
        ]
    )

    # Reuses `chat` and `retriever` from the previous example
    question_answer_chain = create_stuff_documents_chain(chat, prompt)
    rag_chain = create_retrieval_chain(retriever, question_answer_chain)

    response = rag_chain.invoke({"input": "What is Task Decomposition?"})
    print(response)

Output:

    {'input': 'What is Task Decomposition?',
     'context': [
      Document(page_content='Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}),
      Document(page_content='Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to "think step by step" to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}),
      Document(page_content='Fig. 2. Examples of reasoning trajectories for knowledge-intensive tasks (e.g. HotpotQA, FEVER) and decision-making tasks (e.g. AlfWorld Env, WebShop). (Image source: Yao et al. 2023).\nIn both experiments on knowledge-intensive tasks and decision-making tasks, ReAct works better than the Act-only baseline where Thought: … step is removed.\nReflexion (Shinn & Labash 2023) is a framework to equips agents with dynamic memory and self-reflection capabilities to improve reasoning skills. Reflexion has a standard RL setup, in which the reward model provides a simple binary reward and the action space follows the setup in ReAct where the task-specific action space is augmented with language to enable complex reasoning steps. After each action $a_t$, the agent computes a heuristic $h_t$ and optionally may decide to reset the environment to start a new trial depending on the self-reflection results.', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}),
      Document(page_content='Here are a sample conversation for task clarification sent to OpenAI ChatCompletion endpoint used by GPT-Engineer. The user inputs are wrapped in {{user input text}}.\n[\n {\n "role": "system",\n "content": "You will read instructions and not carry them out, only seek to clarify them.\nSpecifically you will first summarise a list of super short bullets of areas that need clarification.\nThen you will pick one clarifying question, and wait for an answer from the user.\n"\n },\n {\n "role": "user",\n "content": "We are writing {{a Super Mario game in python. MVC components split in separate files. Keyboard control.}}\n"\n },\n {\n "role": "assistant",', metadata={'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'})],
     'answer': 'Task decomposition involves breaking down a complex task into smaller and simpler steps to make it more manageable. This technique allows models or agents to utilize more computational resources at test time by thinking step by step. By decomposing tasks, models can better understand and interpret the thinking process involved in solving difficult problems.'}

create_stuff_documents_chain

    def create_stuff_documents_chain(
        llm: LanguageModelLike,
        prompt: BasePromptTemplate,
        *,
        output_parser: Optional[BaseOutputParser] = None,
        document_prompt: Optional[BasePromptTemplate] = None,
        document_separator: str = DEFAULT_DOCUMENT_SEPARATOR,
    ) -> Runnable[Dict[str, Any], Any]:
        _validate_prompt(prompt)
        _document_prompt = document_prompt or DEFAULT_DOCUMENT_PROMPT
        _output_parser = output_parser or StrOutputParser()

        def format_docs(inputs: dict) -> str:
            return document_separator.join(
                format_document(doc, _document_prompt) for doc in inputs[DOCUMENTS_KEY]
            )

        return (
            RunnablePassthrough.assign(**{DOCUMENTS_KEY: format_docs}).with_config(
                run_name="format_inputs"
            )
            | prompt
            | llm
            | _output_parser
        ).with_config(run_name="stuff_documents_chain")

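As the source shows, create_stuff_documents_chain simply builds a chain: it formats the documents into a single string, then pipes the result through the prompt, the LLM, and the output parser.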
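The "stuffing" idea itself is small enough to show in plain Python. The sketch below is an assumption-laden toy, not the library code: `stuff_documents` is a hypothetical helper, documents are plain dicts, the separator is assumed to be a blank line (the same "\n\n" join used by format_docs earlier), and the prompt is an ordinary format string with `{context}` and `{input}` slots.

```python
DOCUMENT_SEPARATOR = "\n\n"  # assumed value, for illustration only


def stuff_documents(inputs: dict, prompt_template: str,
                    separator: str = DOCUMENT_SEPARATOR) -> str:
    """Join every document's text and substitute it into the {context} slot."""
    context = separator.join(doc["page_content"] for doc in inputs["context"])
    return prompt_template.format(context=context, input=inputs["input"])


template = "Use the context to answer.\n\n{context}\n\nQuestion: {input}"
docs = [{"page_content": "First chunk."}, {"page_content": "Second chunk."}]

filled = stuff_documents({"input": "What is X?", "context": docs}, template)
print(filled)
```

All retrieved documents go into one prompt, so this approach only works while the combined text fits in the model's context window.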

create_retrieval_chain

    def create_retrieval_chain(
        retriever: Union[BaseRetriever, Runnable[dict, RetrieverOutput]],
        combine_docs_chain: Runnable[Dict[str, Any], str],
    ) -> Runnable:
        if not isinstance(retriever, BaseRetriever):
            retrieval_docs: Runnable[dict, RetrieverOutput] = retriever
        else:
            retrieval_docs = (lambda x: x["input"]) | retriever

        retrieval_chain = (
            RunnablePassthrough.assign(
                context=retrieval_docs.with_config(run_name="retrieve_documents"),
            ).assign(answer=combine_docs_chain)
        ).with_config(run_name="retrieval_chain")

        return retrieval_chain

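create_retrieval_chain first runs retrieval and stores the result under the context key, then calls combine_docs_chain and stores its result under the answer key.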
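That two-step "assign" pattern explains the shape of the response printed earlier (input, context, answer). Here is a minimal plain-Python sketch of it; `assign`, `toy_retriever`, and `toy_combine_docs` are hypothetical stand-ins rather than LangChain APIs.

```python
def assign(state: dict, **computed) -> dict:
    """Return a copy of `state` with extra keys, each computed from the current state."""
    new_state = dict(state)
    for key, func in computed.items():
        new_state[key] = func(state)
    return new_state


def toy_retriever(query: str) -> list[str]:
    # Hypothetical retriever: returns one fake chunk per query
    return [f"chunk mentioning {query}"]


def toy_combine_docs(state: dict) -> str:
    # Hypothetical combine_docs_chain: sees both the input and the context
    return f"Answer to {state['input']!r} from {len(state['context'])} chunk(s)"


def retrieval_chain(state: dict) -> dict:
    # Step 1: retrieve with the "input" value and store the result as "context"
    state = assign(state, context=lambda s: toy_retriever(s["input"]))
    # Step 2: run the combine step on the enriched state and store "answer"
    state = assign(state, answer=toy_combine_docs)
    return state


response = retrieval_chain({"input": "What is Task Decomposition?"})
print(response)
```

Because each step only adds a key, the caller gets back the original input, the retrieved context, and the answer together, which is convenient for showing sources next to the answer.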